On the path to autonomous mobility, researchers have developed camera and image-sensing technologies that allow vehicles to sense and visualise their surroundings reliably. According to scientists from Chung-Ang University, however, these technologies still face challenges.

As Professor Joonki Paik explained, cameras often shift out of position during dynamic driving, and “camera calibration is of utmost importance for future vehicular systems, especially autonomous driving, because camera parameters, such as focal length, rotation angles, and translation vectors, are essential for analysing the 3D information in the real world.”
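
To make the role of these parameters concrete, here is a minimal sketch of the standard textbook pinhole camera model in Python. It is not the Chung-Ang team's code: the function names, the focal length `f` expressed in pixels, the principal point `(u0, v0)` and the pitch/yaw rotation convention are all illustrative assumptions. The sketch also shows why vanishing points are useful for calibration: the image of a 3D direction depends only on the rotation and focal length, not on the translation.

```python
import math

# Illustrative pinhole model; names and the rotation convention are assumptions.

def matmul3(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def rotation(pitch, yaw):
    """R = Rx(pitch) @ Ry(yaw), angles in radians (one of several conventions)."""
    cp, sp = math.cos(pitch), math.sin(pitch)
    cw, sw = math.cos(yaw), math.sin(yaw)
    rx = [[1.0, 0.0, 0.0], [0.0, cp, -sp], [0.0, sp, cp]]
    ry = [[cw, 0.0, sw], [0.0, 1.0, 0.0], [-sw, 0.0, cw]]
    return matmul3(rx, ry)

def project(point_w, f, u0, v0, rot, t):
    """Pinhole projection: X_c = R X_w + t, then u = f X/Z + u0, v = f Y/Z + v0."""
    xc = [sum(rot[i][j] * point_w[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0:
        raise ValueError("point is behind the camera")
    return (f * xc[0] / xc[2] + u0, f * xc[1] / xc[2] + v0)

def vanishing_point(direction_w, f, u0, v0, rot):
    """Image of a 3D direction: the translation drops out, only R and f matter."""
    dc = [sum(rot[i][j] * direction_w[j] for j in range(3)) for i in range(3)]
    if dc[2] <= 0:
        raise ValueError("direction does not point in front of the camera")
    return (f * dc[0] / dc[2] + u0, f * dc[1] / dc[2] + v0)

# Example values (assumed): 800 px focal length, 1280x720 image, camera yawed slightly.
R = rotation(0.0, math.atan(0.25))
print(vanishing_point((0.0, 0.0, 1.0), 800.0, 640.0, 360.0, R))
print(project((0.0, 0.0, 1e6), 800.0, 640.0, 360.0, R, (0.0, 1.5, 0.0)))
```

With these example numbers the forward direction's vanishing point sits 200 px to the right of the principal point, and a very distant point on a line along that direction projects almost onto it, whatever the translation. A small rotation error therefore shows up directly as a shifted vanishing point.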

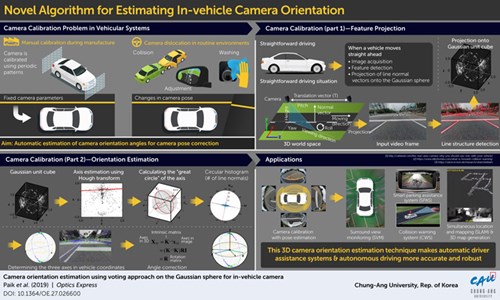

Previous methods for estimating these camera parameters have included computational approaches such as voting algorithms, the Gaussian sphere, and deep learning and machine learning, among other techniques. However, according to Paik, none of these methods is fast enough to estimate the parameters accurately in real time under real-world driving conditions.

To address this speed problem, the Chung-Ang University team combined several of these existing approaches into a novel method. It is designed for fixed-focus cameras mounted at the front of the vehicle and for straight-ahead driving.

The camera captures an image of the scene ahead, and parallel lines on objects in the image are associated with the three Cartesian axes. These lines are then projected onto a Gaussian sphere, and the normals of the planes passing through the lines and the camera centre are extracted. A feature-extraction technique called the Hough transform is applied to pinpoint the “vanishing point” in the direction of driving (vanishing points are points at which parallel lines appear to intersect in an image taken from a certain perspective, such as the rails of a railway track converging in the distance). Finally, a circular histogram (a histogram over angles) is used to identify the vanishing points along the two remaining perpendicular axes.
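
The geometry behind the Gaussian-sphere step can be sketched in a few lines of Python. This is a simplified illustration, not the authors' implementation: it assumes a known focal length `f` (in pixels) and principal point `(u0, v0)`, uses hypothetical helper names, and intersects just two line segments, whereas the method described above uses a Hough transform to accumulate evidence from many lines. Each image segment, together with the camera centre, defines a plane whose unit normal is a point on the sphere; images of parallel 3D lines trace great circles that meet at their common vanishing direction.

```python
import math

# Illustrative sketch: helper names, pixel focal length f and principal point
# (u0, v0) are assumptions, not taken from the published method.

def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    if n == 0.0:
        raise ValueError("zero-length vector")
    return tuple(c / n for c in v)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def pixel_to_sphere(u, v, f, u0, v0):
    """Back-project a pixel to a unit ray, i.e. a point on the Gaussian sphere."""
    return normalize(((u - u0) / f, (v - v0) / f, 1.0))

def segment_normal(p1, p2, f, u0, v0):
    """Unit normal of the plane through the camera centre and an image segment.

    The segment traces a great circle on the sphere; this normal is its pole.
    """
    r1 = pixel_to_sphere(p1[0], p1[1], f, u0, v0)
    r2 = pixel_to_sphere(p2[0], p2[1], f, u0, v0)
    return normalize(cross(r1, r2))

def vanishing_direction(n1, n2):
    """Where two great circles meet: the common direction of the 3D lines."""
    d = normalize(cross(n1, n2))
    if abs(d[2]) < 1e-12:
        raise ValueError("vanishing point is at infinity in the image")
    return d if d[2] > 0 else tuple(-c for c in d)  # keep the forward hemisphere

def sphere_to_pixel(d, f, u0, v0):
    """Project a direction back into the image to get the vanishing point."""
    return (f * d[0] / d[2] + u0, f * d[1] / d[2] + v0)

def pan_tilt(d):
    """Horizontal and vertical angles (radians) of d relative to the optical axis.

    Camera frame assumed: x right, y down, z forward.
    """
    return math.atan2(d[0], d[2]), math.atan2(d[1], math.hypot(d[0], d[2]))

# Two lane markings converging symmetrically (example pixel coordinates).
f, u0, v0 = 800.0, 640.0, 360.0
d = vanishing_direction(segment_normal((540, 600), (620, 400), f, u0, v0),
                        segment_normal((740, 600), (660, 400), f, u0, v0))
print(sphere_to_pixel(d, f, u0, v0))  # approximately (640.0, 350.0)
print(pan_tilt(d))
```

Under the assumption of straight-ahead driving, the two angles returned by `pan_tilt` for the driving-direction vanishing point are related to the camera's yaw and pitch, although the exact sign convention depends on how the camera frame is defined.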

Paik’s team tested the method on the road under real driving conditions. They reportedly recorded three driving environments in three videos and assessed the method’s accuracy and efficiency in each. The estimates were accurate and stable in two cases; in the third, performance was poor because trees and bushes were within the camera’s field of view.

Overall, however, the method is said to have performed well under realistic autonomous driving conditions. Paik and the team attribute its high estimation speed to the fact that the 3D voting space is converted to a 2D plane at each step of the process.
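
The speed argument is about the size of the voting space. The sketch below illustrates the idea under stated assumptions and is not the published algorithm: the unit plane normals from the Gaussian-sphere step are taken as given, the dominant (driving) direction is voted for in a 2D accumulator indexed by two angles rather than in a 3D grid, and the two remaining directions, which must be perpendicular to the first, are found with a 1D circular histogram over a single angle. The bin counts, the tolerance `tol` and all function names are hypothetical, illustrative choices.

```python
import math
from itertools import combinations

# Illustrative sketch of reduced-dimension voting; names, bin counts and the
# tolerance are assumptions, not the authors' parameters.

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def normalize(v):
    n = math.sqrt(dot(v, v))
    return tuple(c / n for c in v)

def vote_main_direction(normals, bins=180):
    """2D accumulator over (pan, tilt) angles for the dominant vanishing direction."""
    acc = {}  # (pan_bin, tilt_bin) -> [vote count, summed direction]
    for n1, n2 in combinations(normals, 2):
        c = cross(n1, n2)
        if dot(c, c) < 1e-18:
            continue  # same great circle: no vanishing-point information
        d = normalize(c)
        if d[2] < 0:
            d = tuple(-x for x in d)  # keep the hemisphere in front of the camera
        pan = math.atan2(d[0], d[2])
        tilt = math.atan2(d[1], math.hypot(d[0], d[2]))
        key = (int((pan + math.pi / 2) / math.pi * bins),
               int((tilt + math.pi / 2) / math.pi * bins))
        cell = acc.setdefault(key, [0, (0.0, 0.0, 0.0)])
        cell[0] += 1
        cell[1] = tuple(s + x for s, x in zip(cell[1], d))
    _count, total = max(acc.values(), key=lambda cell: cell[0])
    return normalize(total)  # mean direction of the winning bin

def vote_orthogonal_pair(normals, d1, bins=90, tol=0.05):
    """1D circular histogram for the two directions perpendicular to unit vector d1."""
    helper = (1.0, 0.0, 0.0) if abs(d1[0]) < 0.9 else (0.0, 1.0, 0.0)
    a = normalize(cross(d1, helper))  # (a, b): orthonormal basis of the plane
    b = cross(d1, a)                  # perpendicular to d1
    period = math.pi / 2  # the two directions are 90 degrees apart: fold them together
    hist = [0] * bins
    for n in normals:
        if abs(dot(n, d1)) < tol:
            continue  # great circle passes (nearly) through d1: belongs to the d1 family
        d = normalize(cross(n, d1))  # the only direction perpendicular to both n and d1
        theta = math.atan2(dot(d, b), dot(d, a)) % period
        hist[min(int(theta / period * bins), bins - 1)] += 1
    peak = max(range(bins), key=hist.__getitem__)
    theta = (peak + 0.5) * period / bins  # centre of the peak bin
    d2 = tuple(math.cos(theta) * x + math.sin(theta) * y for x, y in zip(a, b))
    return d2, cross(d1, d2)
```

Each pair of normals costs one vote in a 2D table, and each remaining line costs one vote in a 1D table, so memory and peak search stay small compared with a 3D grid. Folding the angle modulo 90° is a design choice of this sketch: lines from both remaining families then vote for the same bin, and the third direction follows from a cross product.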

Paik said that the method “can be immediately incorporated into automatic driver assistance systems (ADASs).” It could also reportedly be useful in other applications such as collision avoidance, parking assistance, and 3D mapping of the surrounding environment, helping to prevent accidents and make autonomous driving safer.

“We are planning to extend this to smartphone applications like augmented reality and 3D reconstruction,” Paik said.

About Intelligent Transport

Serving the transport industry for more than 15 years, Intelligent Transport is the leading source for information in the urban public transport sector. Covering all the new technologies and developments within this vitally important sector, Intelligent Transport provides high-quality analysis across our core topics: Smart Cities, Digitalisation, Intermodality, Ticketing and Payments, Safety and Security, The Passenger, The Fleet, Business Models and Regulation and Legislation.
